Tunnel interfaces introduction

Introduction

“An IP tunnel is an Internet Protocol (IP) network communications channel between two networks. It is used to transport another network protocol by encapsulation of its packets.” quote from Wikipedia.

Linux supports many kinds of tunnels, but new users often get confused by their differences and are unsure which one best suits a given use case. In this post, I will give a brief introduction to the commonly used tunnel interfaces in the Linux kernel. There is no code analysis, only a brief introduction to the interfaces and their usage on Linux. Anyone with a network background might be interested in this blog post. A list of tunnel interfaces can be obtained by issuing the iproute2 command ip link help. Help on a specific tunnel configuration can also be obtained with the command ip link help <tunnel_type>.

Tunnels

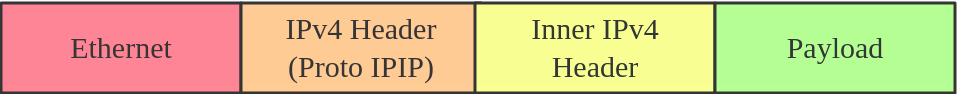

IPIP Tunnel

IPIP tunnel, just as the name suggests, is an IP over IP tunnel, defined in RFC 2003. The IPIP tunnel header looks like

It’s typically employed to connect two internal IPv4 subnets through the public IPv4 internet.

It has the lowest overhead but can only transmit IPv4 unicast traffic, so you cannot send multicast through an IPIP tunnel.

IPIP tunnel supports both IP over IP and MPLS over IP.

Note: When the ipip module is loaded, or an IPIP device is created for the first time, the Linux kernel will create a tunl0 default device in each namespace, with attributes local=any and remote=any. When receiving IPIP protocol packets, the kernel will forward them to tunl0 as a fallback device if it can’t find another device whose local/remote attributes match their source or destination address more closely.

How to create an IPIP tunnel
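
A minimal sketch of the usual iproute2 steps for one endpoint, not necessarily the exact commands this post originally showed. The device name ipip0, the helper name ipip_cmds, and all addresses (documentation ranges plus a made-up inner subnet) are assumptions for illustration. The helper only prints the commands so they can be reviewed first.

```shell
# Print the commands for one IPIP endpoint.
# Usage: ipip_cmds LOCAL_IPV4 REMOTE_IPV4 INNER_ADDR/PREFIX
ipip_cmds() {
    cat <<EOF
ip link add name ipip0 type ipip local $1 remote $2
ip link set ipip0 up
ip addr add $3 dev ipip0
EOF
}

# Placeholder addresses; swap local and remote on the peer.
ipip_cmds 198.51.100.1 203.0.113.1 10.42.0.1/24
```

To apply the result, pipe the output to sh as root on each endpoint.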

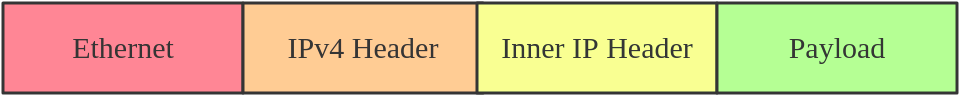

SIT Tunnel

SIT stands for Simple Internet Transition. Its main purpose is to interconnect isolated IPv6 networks located in the global IPv4 Internet.

Initially, it only had an IPv6 over IPv4 tunneling mode. After years of development, it acquired support for several different modes, such as ipip (the same as the IPIP tunnel), ip6ip, mplsip, and any. Mode any is used to accept both IP and IPv6 traffic, which may prove useful in some deployments. SIT tunnels also support ISATAP, and here is a usage example.

The SIT tunnel header looks like

When the sit module is loaded, the Linux kernel will create a default device, named sit0.

How to create a SIT tunnel
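
As a hedged sketch, the following prints the commands for one SIT endpoint using mode any, so the tunnel accepts both IPv4 and IPv6 payloads. The device name sit1, the helper name sit_cmds, and the addresses are assumptions, not values from the original post.

```shell
# Print the commands for one SIT endpoint in mode any.
# Usage: sit_cmds LOCAL_IPV4 REMOTE_IPV4 INNER_IPV6/PREFIX
sit_cmds() {
    cat <<EOF
ip link add name sit1 type sit local $1 remote $2 mode any
ip link set sit1 up
ip addr add $3 dev sit1
EOF
}

# Placeholder outer IPv4 endpoints and an inner IPv6 address.
sit_cmds 198.51.100.1 203.0.113.1 2001:db8:1::1/64
```

Replacing mode any with ip6ip would restrict the tunnel to the classic IPv6 over IPv4 case.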

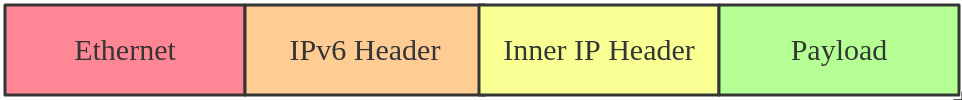

ip6tnl Tunnel

ip6tnl is an IPv4/IPv6 over IPv6 tunnel interface, which looks like an IPv6 version of the SIT tunnel. The tunnel header looks like

ip6tnl supports modes ip6ip6, ipip6, and any. ipip6 is IPv4 over IPv6, ip6ip6 is IPv6 over IPv6, and mode any supports both IPv4 and IPv6 over IPv6.

When the ip6tnl module is loaded, the Linux kernel will create a default device, named ip6tnl0.

How to create an ip6tnl tunnel
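
A possible setup, sketched under assumptions: the device name ip6tnl1, the helper name ip6tnl_cmds, and the documentation-range addresses are all illustrative. The helper prints the commands instead of running them.

```shell
# Print the commands for one ip6tnl endpoint in mode any.
# Usage: ip6tnl_cmds LOCAL_IPV6 REMOTE_IPV6 INNER_ADDR/PREFIX
ip6tnl_cmds() {
    cat <<EOF
ip link add name ip6tnl1 type ip6tnl local $1 remote $2 mode any
ip link set ip6tnl1 up
ip addr add $3 dev ip6tnl1
EOF
}

# Placeholder outer IPv6 endpoints and an inner IPv4 address.
ip6tnl_cmds 2001:db8:a::1 2001:db8:b::1 10.42.0.1/24
```

With mode any, the same device could also carry an inner IPv6 address.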

VTI/VTI6

VTI (Virtual Tunnel Interface) on Linux is similar to Cisco’s VTI and Juniper’s implementation of secure tunnel (st.xx).

This particular tunneling driver implements IP encapsulations, which can be used with xfrm to give the notion of a secure tunnel and then use kernel routing on top.

In general, VTI tunnels operate almost in the same way as ipip or sit tunnels, except that they add a fwmark and IPsec encapsulation/decapsulation.

VTI6 is the IPv6 equivalent of VTI.

How to create a VTI tunnel
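
The sketch below illustrates how the VTI key ties the interface to IPsec policies through a matching mark. It is an illustration under assumptions, not the original post's commands: the device name vti1, the helper name vti_cmds, the key 42, and the addresses are made up, and the matching ESP security associations (ip xfrm state) are assumed to be installed separately.

```shell
# Print the commands for one VTI endpoint plus policies marked with its key.
# Usage: vti_cmds LOCAL_IPV4 REMOTE_IPV4 KEY INNER_ADDR/PREFIX
vti_cmds() {
    cat <<EOF
ip link add name vti1 type vti key $3 local $1 remote $2
ip link set vti1 up
ip addr add $4 dev vti1
ip xfrm policy add src 0.0.0.0/0 dst 0.0.0.0/0 dir out mark $3 tmpl src $1 dst $2 proto esp mode tunnel
ip xfrm policy add src 0.0.0.0/0 dst 0.0.0.0/0 dir in mark $3 tmpl src $2 dst $1 proto esp mode tunnel
EOF
}

# Placeholder endpoints; the key and the policy mark must be the same value.
vti_cmds 198.51.100.1 203.0.113.1 42 10.42.0.1/24
```

Traffic routed into vti1 is marked with the key, which is what lets the marked policies select it for encapsulation.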

You can also configure IPsec via libreswan or strongSwan.

XFRM

The XFRM interface was added in the 4.19 kernel via commit f203b76d78092faf2 (“xfrm: Add virtual xfrm interfaces”). The purpose of these interfaces is to overcome the design limitations of the existing VTI devices.

The main limitations that we see with the current VTI are the following:

- VTI interfaces are L3 tunnels with configurable endpoints. We can have only one VTI tunnel with wildcard src/dst tunnel endpoints in the system.

- VTI needs separate interfaces for IPv4 and IPv6 tunnels.

- VTI works just with tunnel mode SAs.

- VTI is configured with a combination of GRE keys and xfrm marks.

To overcome these limitations, we now have the xfrm interface, which offers the following:

- IPv4 and IPv6 can be tunneled through the same interface.

- There is no limitation on the xfrm mode (tunnel, transport, and beet).

- It’s a generic virtual interface that ensures IPsec transformation.

For more details, please see this article

How to create an XFRM tunnel
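
A minimal sketch, assuming a reasonably recent iproute2 that knows the xfrm link type. The device name xfrm1, the helper name xfrm_cmds, the underlying device eth0, and the if_id value 42 are illustrative assumptions; the security associations and policies tagged with the same if_id are assumed to be installed separately.

```shell
# Print the commands that create an xfrm interface bound to an interface ID.
# Usage: xfrm_cmds UNDERLYING_DEV IF_ID
xfrm_cmds() {
    cat <<EOF
ip link add xfrm1 type xfrm dev $1 if_id $2
ip link set xfrm1 up
EOF
}

# eth0 and 42 are placeholders.
xfrm_cmds eth0 42
```

Because matching is done on if_id rather than on tunnel endpoints, one such interface can serve both IPv4 and IPv6 traffic.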

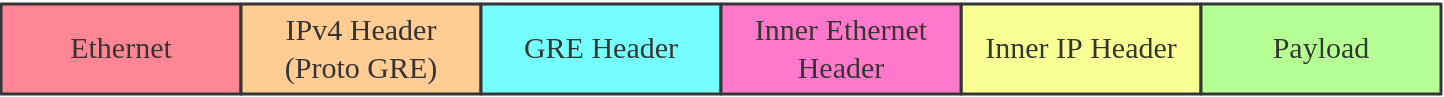

GRE/GRETAP

Generic Routing Encapsulation, also known as GRE, is defined in RFC 2784.

GRE tunneling adds an additional GRE header between the inner and outer IP headers.

In theory, GRE could encapsulate any layer three protocol with a valid EtherType, unlike IPIP, which can only encapsulate IP. The GRE header looks like

Note that you can transport multicast traffic and IPv6 through a GRE tunnel.

When the gre module is loaded, the Linux kernel will create a default device, named gre0.

How to create a GRE tunnel
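
One way this might look, as a sketch rather than the post's original commands. The device name gre1, the helper name gre_cmds, the key 42, and the addresses are assumptions for illustration.

```shell
# Print the commands for one keyed GRE endpoint.
# Usage: gre_cmds LOCAL_IPV4 REMOTE_IPV4 KEY INNER_ADDR/PREFIX
gre_cmds() {
    cat <<EOF
ip link add name gre1 type gre local $1 remote $2 key $3
ip link set gre1 up
ip addr add $4 dev gre1
EOF
}

# Placeholder addresses; both ends must use the same key.
gre_cmds 198.51.100.1 203.0.113.1 42 10.42.0.1/24
```

The key is optional; it is included here because it lets several GRE tunnels share the same pair of endpoints.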

While GRE tunnels operate at OSI layer three, GRETAP works at OSI layer two, which means there is an Ethernet header in the inner header.

How to create a GRETAP tunnel
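
A short sketch under the same caveats: the device name gretap1, the helper name gretap_cmds, and the addresses are illustrative assumptions.

```shell
# Print the commands for one GRETAP endpoint.
# Usage: gretap_cmds LOCAL_IPV4 REMOTE_IPV4
gretap_cmds() {
    cat <<EOF
ip link add name gretap1 type gretap local $1 remote $2
ip link set gretap1 up
EOF
}

# Placeholder outer endpoints.
gretap_cmds 198.51.100.1 203.0.113.1
```

Since the device carries Ethernet frames, it can be attached to a bridge like any other layer two port.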

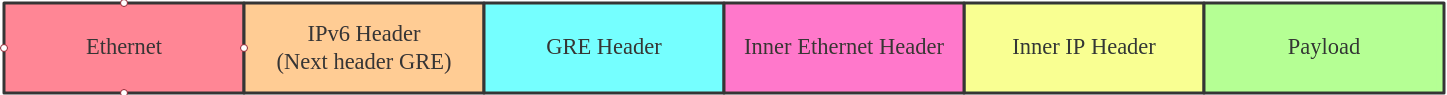

GRE6/GRE6TAP

GRE6 is the IPv6 equivalent of GRE, which allows us to encapsulate any layer three protocol over IPv6. The tunnel header looks like

GRE6TAP, just like GRETAP, has an Ethernet header in the inner header.

How to create a GRE6 tunnel
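
The following sketch covers both the layer three (ip6gre) and the layer two (ip6gretap) variant. The helper name gre6_cmds, the device names gre6 and gre6tap, and the addresses are assumptions chosen for illustration.

```shell
# Print the commands for one GRE-over-IPv6 endpoint.
# Usage: gre6_cmds TYPE NAME LOCAL_IPV6 REMOTE_IPV6   (TYPE: ip6gre or ip6gretap)
gre6_cmds() {
    cat <<EOF
ip link add name $2 type $1 local $3 remote $4
ip link set $2 up
EOF
}

# Layer three variant.
gre6_cmds ip6gre gre6 2001:db8:a::1 2001:db8:b::1
# Layer two variant (an alternative, carrying Ethernet frames).
gre6_cmds ip6gretap gre6tap 2001:db8:a::1 2001:db8:b::1
```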

FOU

Tunneling can happen at multiple levels in the networking stack. IPIP, SIT, and GRE operate at the IP level, while FOU (foo over UDP) is UDP-level tunneling.

UDP tunneling has some advantages, as UDP works with existing hardware infrastructure, such as RSS in NICs, ECMP in switches, and checksum offload. The developer’s patch set shows significant performance increases for the SIT and IPIP protocols.

Currently, the FOU tunnel supports encapsulation protocols based on IPIP, SIT, and GRE.

An example FOU header looks like

How to create a FOU tunnel
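
A sketch of the two commands this section describes, with the port 5555 and the interface name tun1 taken from the text. The helper name fou_cmds and the endpoint addresses are assumptions for illustration.

```shell
# Print the commands for a FOU receive port and an IPIP tunnel using it.
# Usage: fou_cmds LOCAL_IPV4 REMOTE_IPV4 PORT
fou_cmds() {
    cat <<EOF
ip fou add port $3 ipproto 4
ip link add name tun1 type ipip local $1 remote $2 encap fou encap-sport auto encap-dport $3
EOF
}

# Placeholder endpoints; ipproto 4 is IP-in-IP.
fou_cmds 198.51.100.1 203.0.113.1 5555
```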

The first command configures a FOU receive port for IPIP bound to 5555; for GRE, you need to set ipproto 47. The second command sets up a new IPIP virtual interface (tun1) configured for FOU encapsulation, with destination port 5555.

NOTE: FOU is not supported in RHEL

GUE

GUE (Generic UDP Encapsulation) is another kind of UDP tunneling. The difference between FOU and GUE is that GUE has its own encapsulation header (GUE), which contains the protocol info and other data.

The GUE tunnel header looks very similar to VXLAN, but a GUE tunnel supports inner IPIP, SIT, and GRE encapsulation.

An example GUE header looks like

How to create a GUE tunnel
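
A hedged sketch of a GUE setup with an IPIP tunnel on port 5555. The helper name gue_cmds, the device name tun1, and the endpoint addresses are assumptions, not values confirmed by the original post.

```shell
# Print the commands for a GUE receive port and an IPIP tunnel using it.
# Usage: gue_cmds LOCAL_IPV4 REMOTE_IPV4 PORT
gue_cmds() {
    cat <<EOF
ip fou add port $3 gue
ip link add name tun1 type ipip local $1 remote $2 encap gue encap-sport auto encap-dport $3
EOF
}

# Placeholder endpoints.
gue_cmds 198.51.100.1 203.0.113.1 5555
```

No ipproto is given on the receive port because the GUE header itself carries the inner protocol.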

This will set up a GUE receive port for IPIP bound to 5555, and an IPIP tunnel configured for GUE encapsulation.

NOTE: GUE is not supported in RHEL

GENEVE

GENEVE (Generic Network Virtualization Encapsulation) supports all of the capabilities of VXLAN, NVGRE and STT, and was designed to overcome their perceived limitations. Many believe GENEVE could eventually replace these earlier formats entirely. The tunnel header looks very similar to that of VXLAN.

The main difference is that the GENEVE header is flexible. It’s very easy to add new features by extending the header with a new TLV (Type-Length-Value) field. Here is the latest GENEVE IETF draft.

For more details, you can refer to a blog post specifically focused on what GENEVE is.

OVN uses GENEVE as its default encapsulation.

How to create a GENEVE tunnel
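
As a minimal sketch, assuming a point-to-point setup: the device name geneve0, the helper name geneve_cmds, the VNI 100, and the remote address are illustrative values only.

```shell
# Print the commands for one GENEVE endpoint.
# Usage: geneve_cmds VNI REMOTE_IPV4
geneve_cmds() {
    cat <<EOF
ip link add name geneve0 type geneve id $1 remote $2
ip link set geneve0 up
EOF
}

# Placeholder VNI and remote endpoint; both ends must use the same VNI.
geneve_cmds 100 203.0.113.1
```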

ERSPAN/IP6ERSPAN

ERSPAN (Encapsulated Remote Switched Port Analyzer) uses GRE encapsulation to extend the basic port mirroring capability from Layer 2 to Layer 3, which allows the mirrored traffic to be sent through a routable IP network.

The ERSPAN header looks like

The ERSPAN tunnel allows a Linux host to act as an ERSPAN traffic source and send the ERSPAN mirrored traffic to a remote host, or to act as an ERSPAN destination that receives and parses the ERSPAN packets generated by Cisco or other ERSPAN-capable switches. This can be used to analyze, diagnose, and detect malicious traffic.

Linux currently supports most features of two ERSPAN versions, v1 (type II) and v2 (type III).

How to create an ERSPAN tunnel
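
The sketch below shows a version 1 (type II) ERSPAN source that mirrors ingress traffic from a monitored device into the tunnel. The helper name erspan_cmds, the device name erspan1, the key 100, the ERSPAN index 123, the monitored device eth0, and the addresses are all assumptions for illustration.

```shell
# Print the commands for an ERSPAN v1 source and a tc mirror rule feeding it.
# Usage: erspan_cmds LOCAL_IPV4 REMOTE_IPV4 KEY MONITORED_DEV
erspan_cmds() {
    cat <<EOF
ip link add dev erspan1 type erspan local $1 remote $2 seq key $3 erspan_ver 1 erspan 123
ip link set erspan1 up
tc qdisc add dev $4 handle ffff: ingress
tc filter add dev $4 parent ffff: matchall skip_hw action mirred egress mirror dev erspan1
EOF
}

# Placeholder endpoints; eth0 is the device whose ingress traffic is mirrored.
erspan_cmds 198.51.100.1 203.0.113.1 100 eth0
```

The tc rules are what actually copy packets into erspan1; without them the tunnel exists but carries no mirrored traffic.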

Summary

Here is a summary of all the tunnels we introduced.

| Tunnel/Link Type | Outer Header | Encapsulation Header | Inner Header |
|---|---|---|---|
| ipip | IPv4 | None | IPv4 |
| sit | IPv4 | None | IPv4/IPv6 |
| ip6tnl | IPv6 | None | IPv4/IPv6 |
| vti | IPv4 | IPsec | IPv4 |
| vti6 | IPv6 | IPsec | IPv6 |
| gre | IPv4 | GRE | IPv4/IPv6 |
| gretap | IPv4 | GRE | Ether + IPv4/IPv6 |
| ip6gre | IPv6 | GRE | IPv4/IPv6 |
| ip6gretap | IPv6 | GRE | Ether + IPv4/IPv6 |
| fou | IPv4/IPv6 | UDP | IPv4/IPv6/GRE |
| gue | IPv4/IPv6 | UDP + GUE | IPv4/IPv6/GRE |
| geneve | IPv4/IPv6 | UDP + Geneve | Ether + IPv4/IPv6 |
| erspan | IPv4 | GRE + ERSPAN | IPv4/IPv6 |
| ip6erspan | IPv6 | GRE + ERSPAN | IPv4/IPv6 |

Note: All configurations in this tutorial are volatile and won’t survive a server reboot. If you want to make the configuration persistent across reboots, please consider using a networking configuration daemon, such as NetworkManager, or distribution-specific mechanisms.

Author Hangbin Liu

LastMod 2019-10-18 (816d4dc)